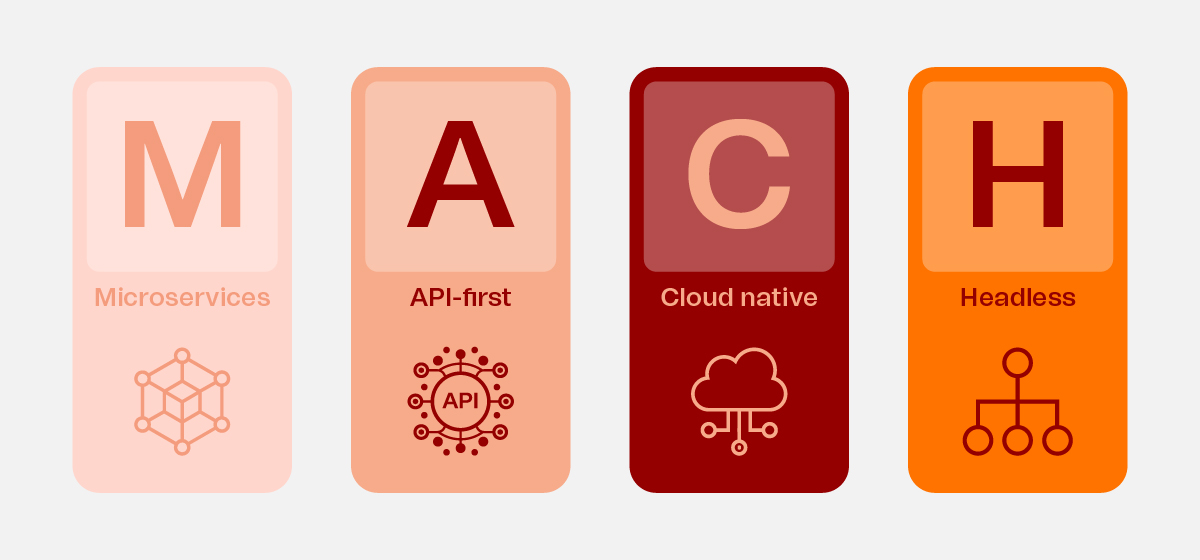

Beginning Your Composable Journey

Let the exercise begin! At first glance, this process might appear to be a highly structured and meticulously documented assessment, one that can be followed step by step without deviation. However, reality is always more complex. While the process has several clearly defined steps, that structure is often disrupted by the nuances, specific challenges, and individual circumstances unique to the client and its internal way of working.